The Morality and Practicality of Tit for Tat

Nicole Player

Economists have long argued that game theory provides tools for modeling human interactions mathematically. In tournaments that pitted repeated Prisoner’s Dilemma strategies against one another, Robert Axelrod found that ‘Tit for Tat’ earned the highest overall score. Since the 1980s, Tit for Tat and its strict reciprocation have been cited in thousands of publications as the best policy for fostering cooperation in a self-interested Western society. However, treating Tit for Tat as a strategy for everyday life raises moral and practical questions about its retaliatory nature. This article therefore proposes a more forgiving strategy, Generous Tit for Tat, and a variant of it called Nice and Forgiving, to take over once Tit for Tat has weeded out the ‘defectors’ of a society. It also reviews several ways Axelrod proposes for promoting further cooperation in our communities through policy and education.

1. Introduction

One of the world’s first recorded law codes is the Code of Hammurabi, which dates to roughly 1750 BC. The code follows the principle of lex talionis, the law of retributive justice often associated with the modern saying ‘an eye for an eye’ (Arce 2010). King Hammurabi prescribed strict reciprocity and harsh punishment for crimes, and while some of this swift justice is unacceptable in Western societies today, the ideas of fairness and reciprocation behind it remain (Devm 2021). ‘An eye for an eye’ is still promoted as the best strategy for dealing with others and keeping the peace, perhaps most vehemently in the form of an economic game theory strategy called ‘Tit for Tat’ (TFT).

TFT is a strategy for the repeated Prisoner’s Dilemma in which a player cooperates on the first move and then, for the rest of the game, copies whatever the opponent did on the previous move, thereby maintaining trust with those who deserve it and punishing betrayers. Political scientist Robert Axelrod champions this strategy in his 1984 book, The Evolution of Cooperation. Axelrod presents TFT as a model for how we might live in a capitalist world built on incentives and self-interest, in the hope of maximizing cooperation without exploitation. Although his findings have convinced many of TFT’s efficacy, other experts question its success, its practicality in the real world, and its morality. This article outlines theories and experiments regarding TFT in Prisoner’s Dilemma situations and explores how it can be applied to modern Western society. It then suggests a potentially better strategy to live by, along with additional methods for inducing cooperation in a culture dominated by self-interest.
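The rule is simple enough to express in a few lines. Below is a minimal Python sketch, assuming moves are encoded as the strings "C" (cooperate) and "D" (defect) and that each strategy sees both players’ past moves; `tit_for_tat`, `always_defect`, and `play` are hypothetical names chosen for illustration, not Rapoport’s original program.

```python
from typing import Callable, List, Tuple

C, D = "C", "D"  # cooperate / defect

# A strategy receives (its own past moves, the opponent's past moves)
# and returns its next move.
Strategy = Callable[[List[str], List[str]], str]


def tit_for_tat(mine: List[str], theirs: List[str]) -> str:
    """Cooperate on the first move, then copy the opponent's previous move."""
    return C if not theirs else theirs[-1]


def always_defect(mine: List[str], theirs: List[str]) -> str:
    """A 'mean' strategy that never cooperates."""
    return D


def play(a: Strategy, b: Strategy, rounds: int) -> Tuple[List[str], List[str]]:
    """Play a repeated game and return both players' move sequences."""
    moves_a: List[str] = []
    moves_b: List[str] = []
    for _ in range(rounds):
        # Both moves are chosen simultaneously from the same history.
        move_a = a(moves_a, moves_b)
        move_b = b(moves_b, moves_a)
        moves_a.append(move_a)
        moves_b.append(move_b)
    return moves_a, moves_b


tft_moves, alld_moves = play(tit_for_tat, always_defect, 5)
print("".join(tft_moves))  # CDDDD: cooperate once, then mirror the defections
```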

2. A Brief Recap of Game Theory

Game theory gained prominence in the mid-1900s as a tool, developed by John von Neumann, Oskar Morgenstern, John Nash, and others, to explain, predict, and guide the behavior of rational and self-interested individuals during strategic interactions (Aldred 2019). At the most basic level, a game is defined by a set of players, strategies, payoffs, and information. Economists often simplify a model by assuming a two-player symmetric game, in which both players choose between the same two strategies (usually a cooperate option and a defect option), so that each player’s payoff depends on which of four possible outcomes results from their own choice and their opponent’s (Duersch et al. 2014). To keep analysis tractable, game theorists impose simplifying assumptions such as symmetric payoffs, complete information, and simultaneous action. These assumptions make game theory difficult to apply to the real world, where interactions are far more fluid and varied and the payoff (or utility) a person receives from each interaction is hard to measure. Still, even if the rational players of game theory are unlike real humans, game theory can offer valuable insight into social interactions (Aldred 2019). It has been especially useful through the discovery and exploration of the Prisoner’s Dilemma.

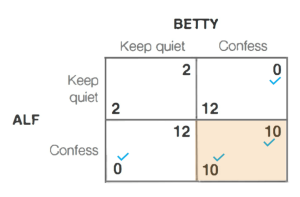

The Prisoner’s Dilemma, named by Albert Tucker in 1950, describes a situation in which defection is individually the better option for each party, whatever the other party does. It is usually modeled as a two-player symmetric game. A standard illustration involves two gang members, Alf and Betty, who are each invited to inform on the other. Each is told that by confessing and implicating the partner, they will receive immunity from prosecution while the partner gets a ten-year sentence. If both stay silent, each will serve two years for a minor crime. If both confess, however, the immunity deal is off and each is sentenced to eight years (Gaus 2008). Like every Prisoner’s Dilemma, this one is structured so that no matter what Alf does, Betty is better off defecting and betraying Alf’s trust than keeping it: she serves no time instead of two years if Alf stays silent, and eight years instead of ten if he confesses. The same holds for Alf, so in a one-shot game two rational, self-interested players will both defect. Mutual defection leaves both worse off (eight years in prison each rather than two) than if they had cooperated.
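The dominance argument above can be checked mechanically. A minimal sketch using the sentences from the Alf and Betty example (years in prison, so lower is better); the table layout and the function names are illustrative assumptions:

```python
# Years in prison, indexed by (Alf's choice, Betty's choice) -> (Alf, Betty).
# Figures follow the Alf and Betty example; lower is better.
SENTENCES = {
    ("silent", "silent"): (2, 2),
    ("silent", "confess"): (10, 0),
    ("confess", "silent"): (0, 10),
    ("confess", "confess"): (8, 8),
}
CHOICES = ("silent", "confess")


def betty_best_reply(alf_choice: str) -> str:
    """Choice that minimizes Betty's sentence, given Alf's choice."""
    return min(CHOICES, key=lambda b: SENTENCES[(alf_choice, b)][1])


def alf_best_reply(betty_choice: str) -> str:
    """Choice that minimizes Alf's sentence, given Betty's choice."""
    return min(CHOICES, key=lambda a: SENTENCES[(a, betty_choice)][0])


# Confessing is the best reply to either choice, i.e. a dominant strategy,
# even though mutual confession (8, 8) is worse for both than (2, 2).
for other in CHOICES:
    print(other, "->", betty_best_reply(other), alf_best_reply(other))
# silent -> confess confess
# confess -> confess confess
```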

Prisoner’s Dilemma

The Prisoner’s Dilemma appears on a broader scale throughout our capitalist, democratic society, and it need not be limited to two players. Economist Amartya Sen (1970) cites the Prisoner’s Dilemma as a contributor to a “liberal paradox,” in which letting people exercise more freedom of choice may lead to suboptimal outcomes. For example, if five smokers share a room, everyone is far better off if no one smokes than if everyone does, yet each will still smoke because each considers only what is individually better; absent incentives against it, a Prisoner’s Dilemma results (De Bruin 2005). Importantly, though, the Prisoner’s Dilemma is far more likely to end poorly in a one-shot game. When the same people play the game repeatedly, a much wider range of strategies comes into play. One might defect every time or cooperate every time. One might cooperate until the other player defects and then defect for the rest of the game out of revenge. There are many ways to play a repeated Prisoner’s Dilemma, and Robert Axelrod set out to find which one earns a player the most utility.
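Sen’s smoking example can be made concrete as an n-player game. The numbers below are hypothetical (3 units of private pleasure per smoker, and 1 unit of harm that each smoker imposes on everyone in the room, including themselves); they are chosen only so that smoking is individually dominant while universal smoking is collectively worse:

```python
N = 5          # smokers in the room
PLEASURE = 3   # hypothetical private benefit of smoking
HARM = 1       # hypothetical harm each smoker imposes on every person present


def payoff(smokes: bool, others_smoking: int) -> int:
    """One person's payoff given their own choice and how many others smoke."""
    total_smokers = others_smoking + (1 if smokes else 0)
    return (PLEASURE if smokes else 0) - HARM * total_smokers


# Smoking is better for each individual no matter how many others smoke...
smoking_dominant = all(payoff(True, k) > payoff(False, k) for k in range(N))
print(smoking_dominant)  # True

# ...yet everyone smoking leaves each person worse off than nobody smoking.
print(payoff(True, N - 1), payoff(False, 0))  # -2 0
```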

3. Axelrod’s Findings

In the early 1980s, Axelrod invited fourteen game theory experts to submit programs encoding their own strategies to his Computer Prisoner’s Dilemma Tournament. He used a round-robin format that pitted each strategy against every other strategy, against itself, and against a random program (one that cooperates and defects with equal probability), with each game lasting exactly 200 moves. The payoff matrix gave both players 3 points for mutual cooperation and 1 point for mutual defection. If one player defected while the other cooperated, the defector received 5 points and the cooperator received 0 points (Axelrod 1984, 30-31).
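A round-robin tournament of this kind is easy to sketch. The code below uses the payoffs and 200-move game length described above, but the entrants are simple illustrative stand-ins (always cooperate, always defect, a grudge-holding ‘grim trigger,’ and a random player), not the fourteen actual submissions; counting each self-match once is also an assumption about scoring.

```python
import random
from itertools import combinations_with_replacement

C, D = "C", "D"
# (my move, their move) -> (my points, their points), as in Axelrod's tournament.
PAYOFF = {(C, C): (3, 3), (D, D): (1, 1), (D, C): (5, 0), (C, D): (0, 5)}
ROUNDS = 200
RNG = random.Random(1984)  # seeded so runs are reproducible


def tit_for_tat(mine, theirs):
    return C if not theirs else theirs[-1]


def always_defect(mine, theirs):
    return D


def always_cooperate(mine, theirs):
    return C


def grim_trigger(mine, theirs):
    # Cooperate until the opponent defects once, then defect forever.
    return D if D in theirs else C


def random_player(mine, theirs):
    return RNG.choice((C, D))


def match_scores(a, b, rounds=ROUNDS):
    """Total points for a and b over one repeated game."""
    hist_a, hist_b, score_a, score_b = [], [], 0, 0
    for _ in range(rounds):
        move_a, move_b = a(hist_a, hist_b), b(hist_b, hist_a)
        pa, pb = PAYOFF[(move_a, move_b)]
        score_a, score_b = score_a + pa, score_b + pb
        hist_a.append(move_a)
        hist_b.append(move_b)
    return score_a, score_b


def round_robin(entrants):
    """Every entrant meets every other entrant, and itself, once."""
    totals = {f.__name__: 0 for f in entrants}
    for a, b in combinations_with_replacement(entrants, 2):
        sa, sb = match_scores(a, b)
        totals[a.__name__] += sa
        if a is not b:  # a self-match is counted only once
            totals[b.__name__] += sb
    return sorted(totals.items(), key=lambda kv: kv[1], reverse=True)


field = [tit_for_tat, always_defect, always_cooperate, grim_trigger, random_player]
for name, total in round_robin(field):
    print(f"{name:18s}{total}")
```

With a field this small the ranking says little; the point is the structure, in which scores accumulate move by move across every pairing.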

To Axelrod’s surprise, the winner was not a strategy that exploited cooperators but the pure reciprocation strategy submitted by Professor Anatol Rapoport of the University of Toronto: Tit for Tat. In a game of 200 moves, a score of 600 would have been very good, meaning that the two players always cooperated, and a score of 200 would have meant that they always defected. TFT averaged 504 points per game (Axelrod 1984, 33). To check that this success was not a fluke, Axelrod ran a second tournament, this time with sixty-two entries from six countries, whose authors all knew that TFT was the strategy to beat. Remarkably, TFT won again. Axelrod (1984, 48) then created six variants of the tournament rules to test TFT’s robustness, and it placed first in five of the six.

4. The Advantages and Limits to TFT’s Success

Since Axelrod published his findings on TFT in 1984, they have been cited thousands of times in economics, politics, psychology, philosophy, and even evolutionary biology (Kramer et al. 2001). Yul Kwon, a winner of the reality TV show Survivor, even claimed to have used TFT to reach the end of the game while still fostering trust (Levitt 2021). Many writers take Axelrod’s experiments as proof that direct reciprocity beats all other strategies, while others are more skeptical of his findings. This raises the question: how successful and practical is TFT really, both in Prisoner’s Dilemma situations and beyond?

Axelrod offers his own explanation for the efficacy of direct reciprocation. In The Evolution of Cooperation, he reasons that “what accounts for Tit for Tat’s robust success is its combination of being nice, retaliatory, forgiving, and clear,” and each of these properties helps explain the strategy’s efficacy (Axelrod 1984, 54). TFT always starts by cooperating. Against any strategy that is likewise never the first to defect (a ‘nice’ strategy), it has no reason to defect and earns many points from the two strategies’ cooperation throughout the game. Against an opponent that initiates defection (a ‘mean’ strategy), its immediate retaliation discourages the opponent from persisting in defection. If that opponent returns to cooperating, TFT’s forgiveness helps restore mutual cooperation. Finally, TFT’s simplicity makes it easy for others to recognize and trust, allowing for long-term cooperation (Axelrod 1984, 54).

All of this makes TFT a very sturdy strategy over time, but one critical limitation often goes ignored: it never actually beats another player. TFT falls behind only when its opponent defects while TFT cooperates, and because it merely mirrors its opponent, it can never pull ahead again (Kopelman 2020). In every individual match, therefore, TFT either draws through mutual cooperation or loses. Amnon Rapoport, Darryl Seale, and Andrew Colman (2015) argue that TFT would not have succeeded without Axelrod’s ‘round-robin tournament’ format, which he kept even for his additional tournament simulations. Had Axelrod chosen a ‘knockout tournament’ format, in which participants are eliminated whenever they lose to an opponent, TFT would have had no chance. Even under a ‘chess tournament’ format, in which participants receive 1 point for each win, half a point for each draw, and no points for each loss, TFT would have been at the bottom of the pack. TFT succeeded consistently only because of Axelrod’s method: everyone plays everyone, and points accumulate across every move rather than being awarded per 200-move game.
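The claim that TFT can never outscore its opponent can be checked directly. Because TFT’s moves are fully determined by its opponent’s past moves, it is enough to test it against arbitrary sequences of opponent moves. A sketch under Axelrod’s payoffs, using randomly generated opponents as a hypothetical test set (the helper name is illustrative):

```python
import random

C, D = "C", "D"
PAYOFF = {(C, C): (3, 3), (D, D): (1, 1), (D, C): (5, 0), (C, D): (0, 5)}


def tft_vs_sequence(opponent_moves):
    """Score TFT against a fixed sequence of opponent moves."""
    tft_score = opp_score = 0
    previous = C  # TFT opens by cooperating
    for move in opponent_moves:
        a, b = PAYOFF[(previous, move)]
        tft_score += a
        opp_score += b
        previous = move  # next round, copy what the opponent just did
    return tft_score, opp_score


rng = random.Random(0)
gaps = []
for _ in range(1000):
    opponent = [rng.choice((C, D)) for _ in range(200)]
    tft, opp = tft_vs_sequence(opponent)
    gaps.append(opp - tft)  # how far the opponent finished ahead of TFT

print(sorted(set(gaps)))
print(tft_vs_sequence([D] * 200))  # (199, 204) against an unconditional defector
```

Every gap comes out as either 0 or 5: the opponent finishes exactly one exploitation ahead if its final move is a defection, and level otherwise. TFT never wins a match, but it also never loses one by more than a single exploited move.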

While this conclusion may call into question TFT’s practicality in head-to-head contests, it is important to note that TFT was not designed for them. Anatol Rapoport began experimenting with the Prisoner’s Dilemma in 1962 and had a deep understanding of non-zero-sum games. He entered TFT into Axelrod’s tournament with the round-robin format in mind, knowing that while TFT was individually weak, it was strong against the field as a whole (Kopelman 2020). Rapoport reasoned that other strategies may be individually strong against the specific strategies they are designed to expose and defeat, but when pitted against each other, they severely reduce each other’s scores. TFT, by contrast, can never lose a match by more than the payoff from a single exploited move, so it gains many points against nice strategies and is not badly beaten by mean ones (Kopelman 2020).

Axelrod reached a conclusion similar to Rapoport’s, noting that TFT needed a sufficiently large cluster of nice strategies to succeed in his tournament. Against a mean strategy that always defects, TFT loses the battle, but alongside enough nice strategies it earns more points than the defector and wins the war. Axelrod (1984, 64) applies this to everyday human interaction, arguing that a group of people who value reciprocity can readily weed out greedy exploiters and build a society based on trust and cooperation. He also simulated TFT’s ecological success: when each strategy’s share of the next generation grows in proportion to its score, TFT keeps spreading through a process analogous to natural selection, so that more and more of the community comes to follow its simple philosophy of retaliation and forgiveness (Axelrod 1984, 51).
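The ecological idea can be sketched with discrete replicator dynamics, in which each strategy’s share grows in proportion to its average score against the current population. This is a deliberate simplification, assuming a population of only TFT and always-defect players, 200-move matches, and Axelrod’s payoffs; Axelrod’s own simulation used his full tournament field, and the function names here are hypothetical.

```python
C, D = "C", "D"
PAYOFF = {(C, C): (3, 3), (D, D): (1, 1), (D, C): (5, 0), (C, D): (0, 5)}


def tit_for_tat(mine, theirs):
    return C if not theirs else theirs[-1]


def always_defect(mine, theirs):
    return D


def match_score(a, b, rounds=200):
    """Points earned by strategy a against strategy b."""
    hist_a, hist_b, score = [], [], 0
    for _ in range(rounds):
        move_a, move_b = a(hist_a, hist_b), b(hist_b, hist_a)
        score += PAYOFF[(move_a, move_b)][0]
        hist_a.append(move_a)
        hist_b.append(move_b)
    return score


def evolve(tft_share, generations=300):
    """Replicator dynamics: a share grows in proportion to its fitness."""
    pair = (tit_for_tat, always_defect)
    s = {(x, y): match_score(x, y) for x in pair for y in pair}
    x = tft_share
    for _ in range(generations):
        fit_tft = x * s[(tit_for_tat, tit_for_tat)] + (1 - x) * s[(tit_for_tat, always_defect)]
        fit_alld = x * s[(always_defect, tit_for_tat)] + (1 - x) * s[(always_defect, always_defect)]
        mean = x * fit_tft + (1 - x) * fit_alld
        x = x * fit_tft / mean
    return x


print(evolve(0.01))            # TFT share approaches 1
print(evolve(0.001) < 0.001)   # True: too small a cluster shrinks
```

With these match scores (600, 199, 204, and 200), TFT out-earns the defectors exactly when 199 + 401x > 200 + 4x, that is, when its share x exceeds 1/397, or about 0.25% of the population. This mirrors the point that a small but sufficient cluster of reciprocators can take over a population of exploiters.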

Axelrod’s conclusions are very hopeful, but they tend to skip the necessary transition from TFT in game theory to TFT in the real world. We live in a mistake-ridden world, and noise and misperception could make TFT impractical (Kramer et al. 2001). Real people often defect by mistake, or merely seem to: a friend ignores your calls, and you treat it as a betrayal when in fact their phone was dead. When the apparent defector is genuinely cooperative and the defection was a miscommunication, a retaliatory defection from a TFT player can signal that the TFT player no longer wants to cooperate, and trust is lost. Moreover, when both people play TFT, a single mistaken defection sets off an echo of alternating retaliations that never dies out on its own, creating an endless feud between the players (Axelrod 1984, 138). TFT therefore runs a greater risk of fostering distrust and failing at its goal of cooperation in a noisy real world than in a carefully constructed game.
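The echo effect can be reproduced with two TFT players and a single forced mistake. In this sketch, the function name and the round chosen for the error are hypothetical; player A’s fourth move (index 3) is flipped to a defection:

```python
C, D = "C", "D"


def tft_pair_with_error(rounds, error_round):
    """Two TFT players; player A's move is flipped to D once, by mistake."""
    a_moves, b_moves = [], []
    for t in range(rounds):
        a = C if not b_moves else b_moves[-1]  # each copies the other's last move
        b = C if not a_moves else a_moves[-1]
        if t == error_round:
            a = D  # the accidental defection (the dead phone)
        a_moves.append(a)
        b_moves.append(b)
    return "".join(a_moves), "".join(b_moves)


a, b = tft_pair_with_error(rounds=10, error_round=3)
print(a)  # CCCDCDCDCD
print(b)  # CCCCDCDCDC
```

After the slip, the two players take turns punishing each other, and mutual cooperation does not return unless another error happens to break the cycle.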

Rapoport’s TFT has potential as a reliable approach to personal and global interactions, but it may need to be adjusted to account for miscommunication. We should also confine claims about TFT’s success to settings in which many people interact repeatedly. Fortunately, when we think of communities and larger companies as collectives, repeated interaction is the norm, and TFT’s rule of reciprocity should prove effective in these environments even with the occasional slip-up. Whether this rule is the most ethical option, however, is more questionable.

5. The Moral Dilemma of TFT

Interestingly, Axelrod’s main example of TFT in action comes not from civilian life but from warfare. In The Evolution of Cooperation, he discusses trench warfare in World War I at length. During this bitter conflict, it was common for one side to refrain from shooting to kill, provided the other side did the same. Axelrod hypothesizes that prolonged interaction between the same opposing units allowed empathy to develop, along with a desire to cooperate and preserve lives, even when this meant defying orders from above (Axelrod 1984, 129). He calls this the “live and let live” scenario and claims it “demonstrates that friendship is hardly necessary for the development of cooperation” (Axelrod 1984, 21-22). Trench warfare may be a fitting setting for an ‘eye for an eye’ mentality, but the modern civilian world holds morality to a higher standard than this state of exception does.

Immanuel Kant proposed that the supreme principle of morality is a principle of practical rationality, which he called the Categorical Imperative. It says that one should act only according to a maxim that one could at the same time will to become a universal law. Put loosely, you should treat a person only in the way you would will everyone to be treated, including yourself (Jankowiak). The Categorical Imperative shares some features with what is commonly called the Golden Rule: treat others as you would want to be treated. This rule is the baseline of what many Western parents teach their children, and it implies a broadly egalitarian view of people as moral equals. Applied to a repeated Prisoner’s Dilemma, the Golden Rule would seem to imply that we should always cooperate, without the added element of revenge. Since we are always better off when the other player cooperates, Kant’s Categorical Imperative and the Golden Rule suggest that it makes moral sense to extend that same cooperation to others and so maintain trust and community.

Hannah Arendt also weighs in on cooperation in political life. A prominent twentieth-century political philosopher born in Germany, Arendt emphasizes fostering a community of ‘togetherness’ that allows for positive human activity and political discourse. She specifically praises forgiveness “in its power to free and to release men and women from the insolvable chaos of vengeance, so that they may reconstitute a community for living together on an entirely new basis” (Chiba 1995). Because she sees forgiveness as key to a strong community, Arendt would likely also regard consistent cooperation as the most moral and most beneficial strategy to live by.

TFT implements some of these moral principles, but it pairs them with quick retaliation. This invites comparison with a Mafia strategy: the Mafia cooperates with others but swiftly punishes those who cross it. Just as the Mafia operates without written statutes or law, a community practicing pure reciprocity “need not rely on external pressures such as law, coercion or social convention to sustain cooperation” (Aldred 2019). Yet most people would not consider the Mafia moral. Axelrod himself acknowledges TFT’s moral dilemma in his book: “Tit for Tat does well by promoting the mutual interest rather than by exploiting the other’s weakness. A moral person couldn’t do much better. What gives Tit for Tat its slightly unsavory taste is its insistence on an eye for an eye. This is rough justice indeed” (Axelrod 1984, 137). While TFT does eventually forgive, it does so only after an initial punishment. In a perfect world, therefore, TFT would not measure up morally to the simple strategy of constant cooperation.

Granted, we do not live in a perfect world. We live in a society where self-interest is often rational because it is rewarded monetarily, so we face many defectors who will exploit our kindness if we never defend ourselves. Axelrod is right that rough justice is necessary to show defectors that you cannot be exploited, both in a game and in real life. A compromise between efficiency and morality is therefore necessary.

6. An Alternative: Making TFT More Generous

To offset TFT’s unyielding retaliation, a modified version that leans further toward forgiveness is warranted. The general form of this modification is called ‘Generous Tit for Tat’ (GTFT): a player begins by cooperating and, once defected against, defects back only a certain percentage of the time, giving the defector a better chance to redeem themselves (Kay 2013). Between two TFT players, a single defection can lock the game into a cycle of retaliation for the rest of the game, but GTFT opens the door to escaping this uncooperative cycle. GTFT not only compensates for noise and misperception in the real world but is also kinder and closer to the moral standard most people wish to hold themselves to. That said, it still exacts justice: once defectors learn that a GTFT player is still capable of retaliation, they cannot take advantage of him or her. Axelrod (1984, 138) himself sees GTFT as a potentially better strategy, saying “It is still rough justice, but in a world of egoists without central authority, it does have the virtue of promoting not only its own welfare, but the welfare of others as well.” The world today does contain many egoists, but laws and customs also value kindness and forgiveness, so GTFT should work well as a general strategy to adopt.
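One way to sketch GTFT is as TFT that answers a defection with cooperation at some fixed probability. The `generosity` value of 1/3 below is a hypothetical choice and the helper names are illustrative; with `generosity = 0` the strategy reduces to plain TFT, and a version that reciprocates nine-tenths of the time would correspond to `generosity = 0.1`.

```python
import random

C, D = "C", "D"


def make_gtft(generosity, rng):
    """Generous TFT: copy the opponent, but answer a defection with
    cooperation with probability `generosity` (an assumed parameter)."""
    def gtft(mine, theirs):
        if not theirs or theirs[-1] == C:
            return C
        return C if rng.random() < generosity else D
    return gtft


def rounds_until_recovery(a, b, error_round=5, max_rounds=500):
    """Play a vs b, force one accidental defection by a, and report how many
    rounds pass before both cooperate again (None if they never do)."""
    ha, hb = [], []
    for t in range(max_rounds):
        ma, mb = a(ha, hb), b(hb, ha)
        if t == error_round:
            ma = D  # the accidental defection
        ha.append(ma)
        hb.append(mb)
        if t > error_round and ma == mb == C:
            return t - error_round
    return None


rng = random.Random(42)
generous = make_gtft(1 / 3, rng)
print(rounds_until_recovery(generous, generous))
print(rounds_until_recovery(make_gtft(0.0, rng), make_gtft(0.0, rng)))  # None: plain TFT stays in the echo
```

Because every retaliation has some chance of being forgiven, the echo of a single mistake dies out after a few rounds on average, whereas plain TFT stays locked in it.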

GTFT comes in many forms, with varying levels of success. Axelrod proposes a harsh version that reciprocates a defection nine-tenths of the time. ‘Tit for Two Tats,’ submitted to Axelrod’s second tournament by evolutionary biologist John Maynard Smith, is far more generous: it defects only after being defected against twice in a row. The strategy I focus on most, however, is ‘Nice and Forgiving.’
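Tit for Two Tats is just as short to write down. The sketch below also introduces a hypothetical ‘alternator’ that defects on every other move, to show one way such generosity can be exploited; neither the names nor the alternator come from Axelrod’s tournaments.

```python
C, D = "C", "D"


def tit_for_two_tats(mine, theirs):
    """Defect only if the opponent defected on both of the last two moves."""
    return D if theirs[-2:] == [D, D] else C


def alternator(mine, theirs):
    """Hypothetical exploiter: defect on every other move, starting now."""
    return D if len(mine) % 2 == 0 else C


def play(a, b, rounds):
    ha, hb = [], []
    for _ in range(rounds):
        ma, mb = a(ha, hb), b(hb, ha)
        ha.append(ma)
        hb.append(mb)
    return "".join(ha), "".join(hb)


print(play(tit_for_two_tats, alternator, 6))  # ('CCCCCC', 'DCDCDC')
```

The alternator collects the temptation payoff on every defection without ever being punished, which illustrates why highly forgiving strategies can suffer in a field with many exploitative players.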

‘Nice and Forgiving’ cooperates as long as its opponent’s cooperation rate stays above 80%. If the rate dips below this threshold, ‘Nice and Forgiving’ retaliates with defection, but reverts to cooperation as soon as its opponent climbs back above the threshold. An experiment conducted at Stanford University in 1991 found that ‘Nice and Forgiving’ came out on top in a round-robin tournament designed to simulate a “noisy world” (Kramer et al. 2001). The tournament added noise by asking each participant for a desired level of cooperation, from 0 to 100%, and then adding or subtracting a random error term. The 13 participants knew of TFT’s earlier success, so most of their strategies were variants of TFT modified to cope with the noise. As a result, most strategies were ‘nice’ in Axelrod’s sense, but only 7 were considered ‘generous’ (meaning forgiving). TFT placed only 8th out of 13. As expected, once the error term pushed a nice player’s cooperation below 100%, whenever TFT played a strategy like itself, the first ‘accidental’ defection set off a cycle of mutual punishment (Kramer et al. 2001).
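A binary-move sketch of ‘Nice and Forgiving’ follows. It makes several assumptions not stated above: moves are simply C or D (the Stanford tournament used continuous levels of cooperation with noise), the cooperation rate is computed over the opponent’s entire history, and a rate of exactly 80% counts as acceptable. The helper `respond_to` is hypothetical.

```python
C, D = "C", "D"


def nice_and_forgiving(mine, theirs, threshold=0.8):
    """Cooperate while the opponent's overall cooperation rate is at least
    `threshold`; defect while it is below, and resume once it recovers."""
    if not theirs:
        return C  # nice: never the first to defect
    rate = theirs.count(C) / len(theirs)
    return C if rate >= threshold else D


def respond_to(opponent_moves):
    """The strategy's moves against a fixed sequence of opponent moves."""
    mine = []
    for t in range(len(opponent_moves)):
        mine.append(nice_and_forgiving(mine, opponent_moves[:t]))
    return "".join(mine)


slip = [C] * 9 + [D] + [C] * 10     # one accidental defection at move 10
print(respond_to(slip))             # all 20 moves are C: the slip is forgiven
print(respond_to([D] * 6))          # CDDDDD: a persistent defector is punished
```

An opponent that defects early but then cooperates steadily is also forgiven once its overall rate climbs back to the threshold.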

As long as its opponents return to a sufficient level of cooperation, ‘Nice and Forgiving’ offers redemption to strategies that intend kindness but occasionally slip. Its generosity tends to dampen cycles of unintended and costly vendettas. At the same time, ‘Nice and Forgiving’ is unusual among GTFT strategies in that it recognizes a lost cause: it keeps defecting while its opponent’s cooperation stays below 80%, so it is not exploited too badly. The Stanford experiment suggests that, in a simulation of the real world, being generous up to a point can be the most effective strategy, provided many other nice strategies are present to support that generosity.

7. Transitioning from TFT to GTFT in a Selfish World

In the capitalist environment in which many societies find themselves today, an ‘every person for themselves’ mentality is easily fostered. In such “kill or be killed” situations, GTFT would be seen as a strategy for “suckers” that warrants a loss of self-respect (Schedler 2020). Many egoists would be more than willing to exploit overly generous players to come out on top. That said, many people are also inclined to always cooperate. This inclination may stem from a desire for a good reputation, altruistic values instilled by factors such as religion and parenting, or plain sympathy.

Of course, it was still TFT, not GTFT, that won Axelrod’s tournaments and proved itself the most effective. The GTFT variant ‘Tit for Two Tats’ placed only 24th in his second tournament, which had many more exploitative players than the first. However, Axelrod (1984, 46-47) calculated that ‘Tit for Two Tats’ would have won the first tournament had it been entered, because the first tournament had far more forgiving players. Likewise, the Stanford experiment found that ‘Nice and Forgiving’ came out on top because most competing strategies favored cooperation (Kramer et al. 2001). This offers insight into how TFT and GTFT might interact in the real world. As discussed in Section 4, TFT still needs a substantial number of nice strategies to work with in order to defeat meaner ones. But with this cooperative support, its swift retaliation can be a secret weapon for eliminating mean strategies over time.

TFT may therefore be the most effective strategy when we start from a point where morality is less valued and defectors are numerous, but once it has weeded out those defectors, we can gradually switch to GTFT and place more trust in one another to cooperate. As Nowak and Sigmund (1992) put it in their paper on evolutionary game theory:

An evolution twisted away from defection (and hence due to TFT) leads not to the prevalence of TFT, but towards more generosity. TFT’s strictness is salutary for the community, but harms its own. TFT acts as a catalyser. It is essential for starting the reaction towards cooperation. It needs to be present, initially, only in a tiny amount; in the intermediate phase, its concentration is high; but in the end, only a trace remains.

We can thus appreciate TFT’s quick, unfailing justice when our society is untrustworthy and needs tough love, such as in the earlier example of trench warfare, but GTFT is what we can evolve into in order to cultivate the trust that TFT mobilizes.

8. Axelrod’s Strategies for the Promotion of Cooperation

Axelrod’s findings on the efficacy and robustness of TFT fuel his belief that it would be an invaluable principle for the world to follow on both a microeconomic and a macroeconomic scale. Given Rapoport et al.’s (2015) critique, however, this holds only where there are repeated interactions among many people. The practicality of TFT, and even of GTFT, diminishes in one-off, one-on-one interactions, where one person can easily exploit another without being held accountable. We must also remember that humans are not the rational agents studied in game theory: we do not fully weigh our options, we make mistakes, and we are raised with a plethora of biases and stereotypes that we must stumble through.

Aware of which situations foster generosity and which do not, Axelrod outlines several strategies to promote cooperation without exploitation in our society, and he tries to do so without placing the burden squarely on our irrational shoulders. These strategies include enlarging the shadow of the future, changing the payoffs, and teaching reciprocity and altruism early.

8.1 Enlarging the Importance of the Future

A key argument of Axelrod’s is that mutual cooperation is sustainable only if the future is sufficiently important relative to the present. If players are unsure whether their interactions will continue, TFT’s threat of retaliation will not be taken as seriously, and players inclined to defect will feel less pressure to cooperate in the present. Thus, the more weight we place on the future (in Axelrod’s notation, the higher the discount parameter w), the more likely cooperation is to persist (Axelrod 1984, 126). One way to enlarge this shadow of the future is to make interactions more durable. Trench warfare is a clear example: the troops in World War I knew they would not be going anywhere for a while. Any shooting would invite easy retaliation, which made it easier to reach an agreement and cooperate (Axelrod 1984, 129). Another way is to make interactions more frequent, so that they occur closer together. This can happen when two large companies dominate an oligopoly and keep others out of the market. As the only two players, they interact with each other more exclusively and more often, so they may find it easier to cooperate in keeping both of them on top. It also helps to decompose interactions by breaking issues into smaller pieces, which means meeting more often to discuss them. The more interactions there are, the more each move matters and is weighed in light of future interactions (Axelrod 1984, 129-130). When we make it clear that interactions will continue far into the future, whether in warfare or in friendship, we are encouraged to keep cooperating indefinitely.
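The role of w can be made precise with the standard calculation behind this argument. If each future move is weighted by w relative to the one before, TFT playing TFT earns R + wR + w²R + … = R/(1 − w), where T, R, P, and S are the temptation, reward, punishment, and sucker payoffs (5, 3, 1, and 0 in Axelrod’s tournament). The two most tempting deviations against TFT are to defect forever or to alternate defection and cooperation, and cooperation holds up only if TFT does at least as well as both, which requires w ≥ max((T − R)/(T − P), (T − R)/(R − S)). A sketch using exact fractions; the function names are illustrative:

```python
from fractions import Fraction

# Axelrod's tournament payoffs: Temptation, Reward, Punishment, Sucker.
T, R, P, S = 5, 3, 1, 0


def value_tft_vs_tft(w):
    """Present value of endless mutual cooperation: R + wR + w^2 R + ..."""
    return R / (1 - w)


def value_alld_vs_tft(w):
    """Defect forever against TFT: T once, then P on every later move."""
    return T + w * P / (1 - w)


def value_alternate_vs_tft(w):
    """Alternate D, C against TFT: T, S, T, S, ..."""
    return (T + w * S) / (1 - w * w)


threshold = max(Fraction(T - R, T - P), Fraction(T - R, R - S))
print(threshold)  # 2/3

for w in (Fraction(1, 2), Fraction(9, 10)):
    tft = value_tft_vs_tft(w)
    print(w, tft >= value_alld_vs_tft(w) and tft >= value_alternate_vs_tft(w))
# 1/2 False
# 9/10 True
```

With w = 1/2 the future is too lightly weighted and alternating defection pays better than cooperating; with w = 9/10 cooperation beats both deviations.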

8.2 Changing the Payoffs

A second strategy Axelrod proposes is to make the Prisoner’s Dilemma less of a dilemma by changing the payoffs of each outcome. The key problem of the Prisoner’s Dilemma is how appealing defection is to a rational agent. If the government passes laws and mandates that punish defection, however, it lowers that appeal (Axelrod 1984, 133). Recent examples are the masking, testing, and vaccination policies that governments imposed during the Covid-19 pandemic. Without government intervention, this public-scale dilemma keeps individual incentives to vaccinate and test low. None of us want to get Covid, and we know that vaccination contributes to public health, but the costs add up: vaccination can be difficult to schedule, can be expensive, and takes time out of our days, and many conspiracy theories claimed it could make us very sick or even implant some sort of tracker. This makes it easy for people to fall into a tragedy of the commons, hoping to free-ride on the herd immunity generated when everyone else gets vaccinated; if everyone reasons this way, too few people get vaccinated (Roberts 2020). But when the government requires vaccination and testing to enter public places and events, and also makes vaccines free and as accessible as possible, both the explicit and implicit costs of vaccination fall, and the Prisoner’s Dilemma weakens. More people ‘cooperate’ and get vaccinated, and those following GTFT-like strategies follow suit. With over 81% of the American population having received at least one Covid-19 vaccine dose by February 2023 (CDC 2023), the pandemic suggests how changing the payoffs can lead to more cooperation in public life.
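The effect of a mandate or fine can be sketched as a penalty subtracted from every outcome in which a player defects. The fine values are hypothetical and the payoffs are Axelrod’s tournament values rather than anything measured about vaccination; the point is only the structure.

```python
C, D = "C", "D"
# (my move, their move) -> my payoff, using Axelrod's tournament values.
BASE = {(C, C): 3, (C, D): 0, (D, C): 5, (D, D): 1}


def with_penalty(payoffs, fine):
    """Subtract a hypothetical fine from every outcome in which I defect."""
    return {(me, them): v - (fine if me == D else 0)
            for (me, them), v in payoffs.items()}


def defection_dominates(payoffs):
    """True if defecting pays strictly more whatever the other player does."""
    return all(payoffs[(D, them)] > payoffs[(C, them)] for them in (C, D))


def cooperation_dominates(payoffs):
    """True if cooperating pays strictly more whatever the other player does."""
    return all(payoffs[(C, them)] > payoffs[(D, them)] for them in (C, D))


for fine in (0, 1, 2, 3):
    game = with_penalty(BASE, fine)
    print(fine, defection_dominates(game), cooperation_dominates(game))
# 0 True False
# 1 False False
# 2 False False
# 3 False True
```

With these numbers, a fine of 1 or 2 removes defection’s dominance without yet making cooperation dominant, and a fine larger than both T − R = 2 and P − S = 1 turns cooperation into the dominant choice.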

8.3 Teaching Altruism and Reciprocity Early

A final way to promote cooperation that Axelrod proposes is to teach children altruism and reciprocity as key values from a young age. Parents should lead by example and teach kindness and compassion while their children are most impressionable, and schools should teach the same values to some extent (Axelrod 1984, 134). Parents should also make their children aware that they may come across an egoist defector (a liar, cheater, etc.) and that, while they should be inclined to forgive such a person, they should be willing to retaliate when necessary so as not to be exploited (for example, by telling a teacher if another child is unkind to others) (Axelrod 1984, 136). As discussed in Section 7, reputation also encourages altruism. Whether on social media or within our close circle of friends, we want to be seen as good people whom others can trust, so we are naturally inclined to cooperate and reciprocate with everyone we meet. Because we tend to want to fit in, a strategy like GTFT arises naturally: we cooperate when others cooperate and defect when others defect (unless we worry too much about our reputation). Altruism and reciprocity are already ingrained in our culture in many ways, but more teaching of these values by parents can only help.

9. Conclusion

In a one-shot Prisoner’s Dilemma game, rationality and morality always clash: the rational action is to defect, while the moral action (as most people would agree) is to cooperate. But when the game is played repeatedly, rational decisions can become increasingly cooperative. TFT has proven itself in game theory, maintaining trust with the nice while weeding out the mean to promote an overall more cooperative society. That said, although TFT may have worked in an unforgiving environment such as trench warfare, in the real world it is a difficult and harsh mentality to live by. Once this strategy of cooperation with unflinching retaliation has done its job, we can ease our behavior to better fit many philosophical and religious views of morality by using variants of ‘Generous Tit for Tat,’ such as ‘Nice and Forgiving.’ We can also promote a more altruistic and reciprocating society by encouraging prolonged, frequent, and familiar interactions, implementing laws and norms that change payoffs, and teaching selfless values early on. As a rational economic model, game theory can only do so much to advise us on real-world policies and social interactions. And yet, the theories that experts from a dozen disciplines have derived from it have produced real insights and show the potential to make our polarized, capitalist nation’s future less bleak.

References

Aldred, J. (2019). Licence to Be Bad: How Economics Corrupted Us. Allen Lane.

Arce, D. (2010). Economics, Ethics and the Dilemma in the Prisoner’s Dilemmas. American Economist, 55(1), 49-57.

Axelrod, R. (1984). The Evolution of Cooperation. Basic Books.

CDC. (February 3, 2023). Percentage of U.S. population who had been given a COVID-19 vaccination as of February 2, 2023, by state or territory [Graph]. In Statista. Retrieved February 7, 2023, from https://www.statista.com/statistics/1202065/population-with-covid-vaccine-by-state-us/.

Chiba, S. (1995). Hannah Arendt on Love and the Political: Love, Friendship, and Citizenship. Cambridge University Press.

De Bruin, B. (2005). Game Theory in Philosophy. Topoi: An International Review of Philosophy, 24(2), 197-208.

Devm. (2021). An Eye for an Eye, a Tooth for a Tooth: Analysis of Hammurabi’s Code. Jus Corpus Law Journal, https://www.juscorpus.com/an-eye-for-an-eye-a-tooth-for-a-tooth-analysis-of-hammurabis-code/.

Duersch, P., Oechssler, J., and Schipper, B. (2014). When Is Tit-For-Tat Unbeatable? International Journal of Game Theory, 43(1), 25-36.

Gaus, G. (2008). On Philosophy, Politics, and Economics. Thomson Wadsworth.

Jankowiak, T. (n.d.). Immanuel Kant. Internet Encyclopedia of Philosophy, https://iep.utm.edu/kantview/.

Kay, R. (2013). Generous Tit for Tat: A Winning Strategy. Forbes, 4 Nov, https://www.forbes.com/sites/rogerkay/2011/12/19/generous-tit-for-tat-a-winning-strategy/?sh=260cf62466eb.

Kopelman, S. (2020). Tit for Tat and Beyond: The Legendary Work of Anatol Rapoport. Negotiation and Conflict Management Research, 13(1), 60-84.

Kramer, R., Wei, J., and Bendor, J. (2001). Golden Rules and Leaden Worlds: Exploring the Limitations of Tit-for-Tat as a Social Decision Rule. In J. Darley, D. Messick, and T. Tyler (Eds.), Social influences on ethical behavior in organizations, 177-200. Lawrence Erlbaum Associates Publishers.

Levitt, S. (2021). Robert Axelrod on Why Being Nice, Forgiving, and Provokable are the Best Strategies for Life. People I Mostly Admire Podcast. Retrieved May 14, 2022, from https://people-i-mostly-admire.simplecast.com/episodes/robert-axelrod-on-why-being-nice-forgiving-and-provokable-are-the-best-strategies-for-life.

Nowak, M., and Sigmund, K. (1992). Tit for Tat in Heterogeneous Populations. Nature, 355, 250-253.

Rapoport, A., Seale, D., and Colman, A. (2015). Is Tit-for-Tat the Answer? On the Conclusions Drawn from Axelrod’s Tournaments. PLoS ONE, 10(7), e0134128.

Roberts, S. (2020). The Pandemic Is a Prisoner’s Dilemma Game. The New York Times. https://www.nytimes.com/2020/12/20/health/virus-vaccine-game-theory.html.

Schedler, A. (2020). Democratic Reciprocity. The Journal of Political Philosophy, 29(2), 252-278.

Sen, A. (1970). The Impossibility of a Paretian Liberal. Journal of Political Economy, 78(1), 152-157.